May 10, 2025

How to Start Working in AI Risk Management & Model Auditing

Learn about roles in AI auditing, red teaming, compliance, and how to start your career in AI risk management and auditing.

What Is AI Risk Management All About?

As brilliant as AI systems can be, they can also be liabilities. That is precisely why we need people who not only develop AI but also evaluate it, test it, and make sure it works as intended and complies with established procedures. If you are the type of person who wonders, “How do we prevent AI systems from causing damage?”, then AI risk management and model auditing might be the right fit for you. You do not need to be a hardcore coder. What you need is curiosity, a structured thought process, and a desire to fix problems before they become crises.

This article will give you an idea about this particular field, its associated career pathways, necessary competencies, and how to get started—even if you are new.

The real risk isn’t that AI will become too intelligent. The real risk is that we will entrust it with power we don’t understand. - Harini Suresh, AI researcher at MIT

Why AI Risk Management Matters Now (Not Later)

AI is being deployed in high-stakes areas like healthcare, hiring, finance, national security, and criminal justice. But:

- Many AI systems make decisions we do not fully understand.

- Most companies lack strong oversight structures.

- Risks can include bias, unfairness, system failure, or manipulation.

Real-world examples:

In 2023, a lawsuit alleged that a healthcare AI system had repeatedly denied care to older patients because of ageist bias. Audit teams have found bias in facial recognition tools used by police. Generative AI models have been used to produce fabricated reports and malicious software.

These incidents may look like simple bad publicity. With AI, however, the damage from a failure is often hard to undo. That is why risk assessors, model auditors, red teamers, and safety reviewers are now being hired onto the teams that build advanced AI systems.

Suggested Read: How to Start a Career in Technical AI Safety

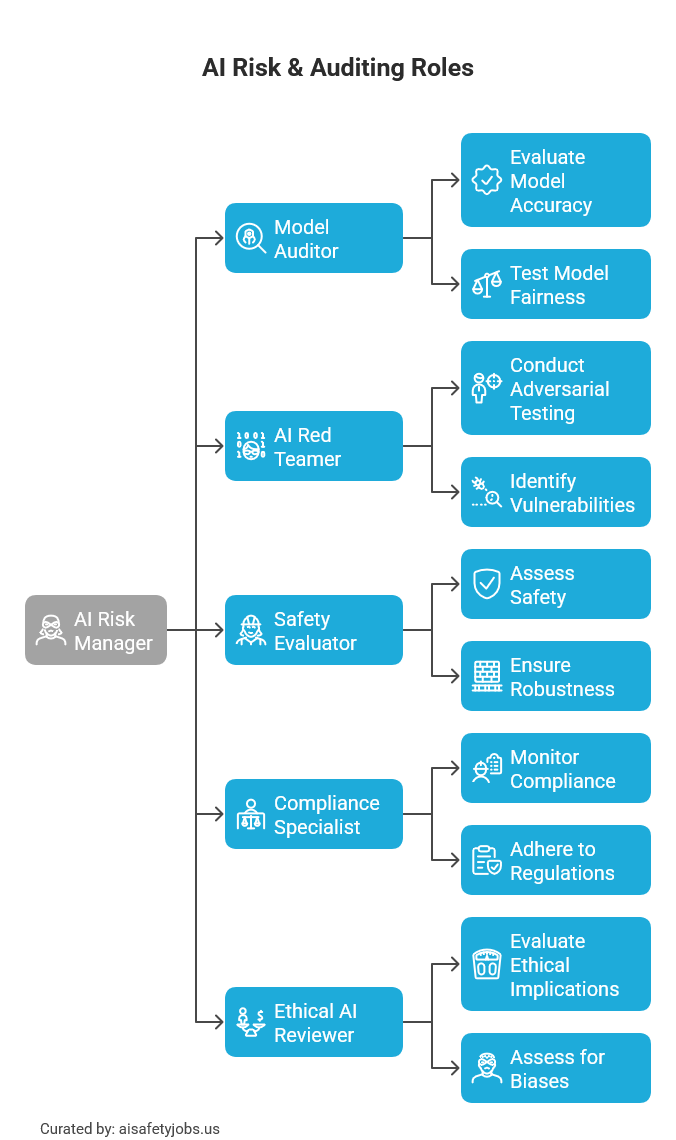

Roles You Can Explore in AI Risk Management & Auditing

In AI risk, you do not need to be an engineer. What you need is structured thinking and a sense of responsibility. Consider the following roles:

- AI Risk Manager: Works within legal bounds to identify, assess, and track AI risks in an organization’s operations. Interfaces with legal, compliance, product, and engineering teams.

- Model Auditor: Examines datasets for bias, fairness, and safety compliance. Tests model outputs and confirms adherence to transparency benchmarks.

- AI Red Teamer: Probes systems before launch to find exploits, focusing on prompt manipulation, system exploitation, and abuse-case discovery.

- Safety Evaluator: Designs benchmarks that stress-test AI systems in edge-case scenarios.

- AI Compliance Specialist: Ensures systems follow internal policies and external regulations such as the EU AI Act or HIPAA.

- Ethical AI Reviewer: Assesses whether an AI deployment is justified and proportionate, examining why the system is being built as well as its intended tasks, user consent, and process transparency.

These roles exist in various contexts:

- Tech companies with internal safety and product review teams, such as Google, Microsoft, OpenAI, and Anthropic.

- Consulting firms such as McKinsey, BCG, or Accenture assisting clients with regulatory foresight.

- Startups providing proprietary AI audit services.

- Government agencies responsible for regulating AI systems deployed in public settings.

To find openings, search job boards such as AI Safety Jobs USA and LinkedIn.

The goal of AI safety isn’t to halt progress. It’s to make sure progress doesn’t harm the people it’s meant to serve. - Sara Hooker, Director of Cohere for AI

Skills You Need (And How to Get Them)

Most AI auditing and risk jobs need a mix of analytical thinking, communication, and systems literacy. Here's a breakdown:

Core Skills:

- Risk assessment frameworks (ISO 31000, NIST AI RMF)

- Scenario planning and threat modeling

- Understanding of machine learning basics (no need to code deeply)

- Communication: explaining risk clearly to non-experts

- Ethics and regulatory awareness (e.g. GDPR, EU AI Act)

- Basic data analysis (Excel, Python, or SQL helps)

How to Learn Them:

- NIST AI Risk Management Framework

- AI Incident Database — real cases of AI going wrong

- AI governance and auditing bootcamps (FLI, GovAI, BlueDot)

- Partnership on AI resources

How to Stand Out: What Most People Don’t Do

Plenty of people follow the news about AI, but very few act on it. The following steps can help you stand out from the rest:

- Run Mini Risk Audits Yourself: Pick a public AI tool such as ChatGPT, an AI resume scanner, or Google Gemini. Test it critically and document every way you find it can produce unfair or discriminatory outputs. Write a mini-audit report and share it on LinkedIn or Medium. This shows that you take initiative and are already doing the work.

- Create a Risk Portfolio: Build a portfolio of your risk work the way a designer assembles a design portfolio. Include bias assessments, ethical assessments, compliance check summaries, red teaming exercises, and evaluations. Even two or three solid pieces are bound to make an impression.

- Participate in Watchdog Projects: Community projects such as Alignment Assemblies or the AI Incident Database frequently need help. Offer to review cases or draft findings for them to build credibility in the community.

- Simulate Red Team Exercises: Using open-source models, try to find adversarial prompts, edge-case failures, or jailbreaks. Document your procedures and share the results of your findings.

- Explain Risk in Simple Language: Write easy-to-understand blog articles or threads about model risks, AI regulation, or the tangible harms AI systems cause. Technical posts are everywhere, but plain explanations reach a wider audience, get shared more, and make you more valuable.

- Cross-Train on Compliance or Law: Many risk positions deal with policy and regulation. Knowing the key parts of the EU AI Act or the U.S. Blueprint for an AI Bill of Rights, even at a surface level, makes you more effective and a rarer candidate.

- Show Strategic Thinking: Risk is not just about listing dangers. It is about trade-offs. If you can discuss when not to block a model, or how to mitigate risk without stopping progress, you will show maturity and leadership potential.
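For the mini risk audit described in the list above, one simple way to put a number on unfair outcomes is to compare how often each group receives a favorable result. The sketch below is a minimal illustration under stated assumptions, not a complete audit method: the records are made-up data, `selection_rates` and `disparate_impact_ratio` are hypothetical helper names, and the 0.8 cutoff borrows the "four-fifths" rule of thumb from U.S. employment practice as an assumed screening threshold.

```python
from collections import defaultdict

# Hypothetical audit log: (group label, whether the tool gave a favorable outcome).
# These records are invented for illustration and are not real findings.
observations = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def selection_rates(records):
    """Return the share of favorable outcomes for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so there is no gap
    return min(rates.values()) / highest


rates = selection_rates(observations)
ratio = disparate_impact_ratio(rates)
# Assumed threshold: the "four-fifths" rule of thumb. Treat it as a screening
# flag that prompts a closer look, not as proof of discrimination.
flagged = ratio < 0.8
print(rates, round(ratio, 2), "flag for review" if flagged else "no flag")
```

A result like this belongs in the audit report alongside the test inputs you used, since a ratio computed from a handful of prompts is only a starting point for further investigation.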

Every AI system has blind spots. Risk management is how we make sure those blind spots don’t become black holes. - Sasha Luccioni, AI Researcher & Climate Lead at Hugging Face

Next Steps: Your AI Risk Career Starter Plan

- Choose a Focus Area: Begin with the topic that interests you most. Bias in hiring algorithms? Deepfake detection? The safety of AI in healthcare? Your passion will motivate the learning process.

- Track Real-World Cases: Revisit actual failures with the help of the AI Incident Database or the newsletter AI Snake Oil. Ask yourself, why did it fail? What could have been done to prevent such failures?

- Follow Experts: Researchers such as Sara Hooker from Cohere, Sasha Luccioni and Irene Solaiman from Hugging Face, and Aviv Ovadya from ETHICX regularly share their knowledge and insights.

- Join the Groups: Participate in groups such as the EA Policy & Risk groups, AI Governance Slack, Women in AI Ethics, or Red Team Village. Ask questions. Learn the language.

- Write Risk Assessments: Pick one AI product and write a one-page memo answering the following questions: What risks exist? How likely are they? What is their impact? How should we mitigate them?

- Attend Inexpensive Courses: Begin with BlueDot’s AI Governance track or FLI’s AI auditing workshop. Courses like these give you the basic vocabulary, the right tools, and even access to mentors.

- Apply to Internships or Junior Positions: Go after junior positions in responsible AI teams or in ethics and compliance departments. A capstone project or short placement gives you real-world experience, even if it lasts only a little while.

- Keep Track of Your Progress: Whether it’s a Notion page, a blog site, or multiple posts on LinkedIn, create a public document that showcases your learning. Transparency builds trust—and recruiters love to see consistent learners.

- Interview Practitioners: Reach out to someone doing AI risk work. Ask about the path they followed: What surprised them? What skills mattered most? One good conversation can reshape your career.

- Stay Curious and Patient: This space is very young. Job titles change. Tools evolve. Keep learning steadily, and opportunities will follow.
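The one-page risk memo from the plan above can start from a small risk register that answers the same four questions: what the risk is, how likely it is, what its impact would be, and how to mitigate it. The following is a rough sketch rather than a standard method: the `Risk` class, the 1 to 5 scales, the likelihood-times-impact score, and the example entries are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One row of a hypothetical risk register for a single AI product."""
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    mitigation: str

    @property
    def score(self) -> int:
        # Simple likelihood x impact score, a common but coarse convention.
        return self.likelihood * self.impact


def rank_risks(risks):
    """Return risks sorted from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Invented example entries for a resume-screening tool; not real findings.
register = [
    Risk("Prompt injection through uploaded resume text", 2, 3,
         "Sanitize inputs and log unusual instructions"),
    Risk("Lower scores for candidates with employment gaps", 4, 4,
         "Audit outcomes by group and add human review"),
    Risk("Confident but incorrect summaries of qualifications", 3, 5,
         "Show source excerpts next to each summary"),
]

ranked = rank_risks(register)
for risk in ranked:
    print(f"{risk.score:>2}  {risk.description}  ->  {risk.mitigation}")
```

Sorting by score gives the memo a natural order, with the highest-priority risks at the top. The numbers are judgment calls, so the memo should explain the reasoning behind each rating instead of presenting the score alone.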

Conclusion

AI risk management is not just about stopping disasters; it is also about building trust. Every secure system, every well-vetted model, and every responsible rollout helps protect people. It holds corporations accountable. And it makes innovation safer for everyone. If catching problems before they reach the public and protecting real people from harm feels meaningful to you, then this field wants you.

Stop postponing. Pick up the fundamentals. Get active. Start doing the work before you have the 'appropriate credentials.' That is how most real specialists got started.

AI is moving fast. But thoughtful people like you can help it move safely.

Do I need a technical background to start a career in AI risk management?

Not always. While a technical background helps, many AI risk management positions, such as compliance analyst or ethical AI reviewer, place more emphasis on systems understanding, policy knowledge, and critical analysis than on coding.

What skills are important for AI auditing and model risk assessment jobs?

Key skills for AI auditing careers include working knowledge of risk frameworks (like the NIST AI RMF) and data laws, the ability to analyze model outputs for potential bias or failure, and clear communication of risk findings.

What’s the difference between AI red teaming, AI auditing, and AI compliance roles?

AI red teaming simulates attacks and misuse scenarios. AI auditing checks models for bias and verifies that processes are transparent. AI compliance ensures systems operate within legal and ethical boundaries. All three are part of a broader AI risk management framework.

How can I build experience for an AI risk management career if I’m just starting out?

Build your portfolio by auditing public models such as ChatGPT or Gemini, writing short risk memos, contributing to open-source safety projects, and sharing your insights publicly through blogs or portfolios.

Which industries are hiring for AI auditing and risk management jobs right now?

AI auditing and risk roles are growing fast in many fields, including tech, healthcare, finance, consulting, and government, especially in organizations focused on AI safety, governance, and regulatory compliance.
